20

Julia Programming for Operations Research 2/e

Changhyun Kwon

Julia Programming for Operations Research

Second Edition

Published by Changhyun Kwon

Copyright © 2019–2021 by Changhyun Kwon

All Rights Reserved.

The author maintains a free online version at https://juliabook.chkwon.net. Paper and electronic versions are available at https://www.chkwon.net/julia and can be purchased at Amazon, Google Play, etc.

Cover Design by Joo Yeon Woo / https://www.joowoo.net

Cat Drawing by Bomin Kwon

version 2022/10/05 00:29:58

Contents

- Preface

- Chapter 1 Introduction and Installation

- Chapter 2 Simple Linear Optimization

- Chapter 3 Basics of the Julia Language

- Chapter 4 Selected Topics in Numerical Methods

- Chapter 5 The Simplex Method

- Chapter 6 Network Optimization Problems

- Chapter 7 Interior Point Methods

- Chapter 8 Nonlinear Optimization Problems

- Chapter 9 Monte Carlo Methods

- Chapter 10 Lagrangian Relaxation

- Chapter 11 Complementarity Problems

- Chapter 12 Parameters in Optimization Solvers

Preface

The main motivation of writing this book was to help myself. I am a professor in the field of operations research, and my daily activities involve building models of mathematical optimization, developing algorithms for solving the problems, implementing those algorithms using computer programming languages, experimenting with data, etc. Three languages are involved: human language, mathematical language, and computer language. My students and I need to go over three different languages. We need “translation” among the three languages.

When my students seek help on the tasks of “translation,” I often provide them with my prior translation as an example or find online resources that may be helpful to them. If students have proper background with proper mathematical education, sufficient computer programming experience, and good understanding of how numerical computing works, students can learn easier and my daily tasks in research and education would go smoothly.

To my frustration, however, many graduate students in operations research take long time to learn how to “translate.” This book is to help them and help me to help them.

I’m neither a computer scientist nor a software engineer. Therefore, this book does not teach the best translation. Instead, I’ll try to teach how one can finish some common tasks necessary in research and development works arising in the field of operations research and management science. It will be just one translation, not the best for sure. But after reading this book, readers will certainly be able to get things done, one way or the other.

What this book teaches

This book is neither a textbook in numerical methods, a comprehensive introductory book to Julia programming, a textbook on numerical optimization, a complete manual of optimization solvers, nor an introductory book to computational science and engineering—it is a little bit of all.

This book will first teach how to install the Julia Language itself. This book teaches a little bit of syntax and standard libraries of Julia, a little bit of programming skills using Julia, a little bit of numerical methods, a little bit of optimization modeling, a little bit of Monte Carlo methods, a little bit of algorithms, and a little bit of optimization solvers.

This book by no means is complete and cannot serve as a standalone textbook for any of the above-mentioned topics. In my opinion, it is best to use this book along with other major textbooks or reference books in operations research and management science. This book assumes that readers are already familiar with topics in optimization theory and algorithms or are willing to learn by themselves from other references. Of course, I provide the best references of my knowledge to each topic.

After reading this book and some coding exercises, readers should be able to search and read many other technical documents available online. This book will just help the first step to computing in operations research and management science. This book is literally a primer on computing.

How this book can be used

This book will certainly help graduate students (and their advisors) for tasks in their research. First year graduate students may use this book as a tutorial that guides them to various optimization solvers and algorithms available. This book will also be a companion through their graduate study. While students take various courses during their graduate study, this book will be always a good starting point to learn how to solve certain optimization problems and implement algorithms they learned. Eventually, this book can be a helpful reference for their thesis research.

Advanced graduate students may use this book as a reference. For example, when they need to implement a Lagrangian relaxation method for their own problem, they can refer to a chapter in this book to see how I did it and learn how they may be able to do it.

It is also my hope that this book can be used for courses in operations research, analytics, linear programming, nonlinear programming, numerical optimization, network optimization, management science, and transportation engineering, as a supplementary textbook. If there is a short course with 1 or 2 credit hours for teaching numerical methods and computing tools in operations research and management science, this book can be primary or secondary textbook, depending on the instructor’s main focus.

Notes to advanced programmers

If you are already familiar with computing and at least one computer programming language, I don’t think this book will have much value for you. There are many resources available on the web, and you will be able to learn about the Julia Language and catch up with the state-of-the-art easily. If you want to learn and catch up even faster with much less troubles, this book can be helpful.

I had some experiences with MATLAB and Java before learning Julia. Learning Julia was not very difficult, but exciting and fun. I just needed a good “excuse” to learn and use Julia. Check what my excuse was in the first chapter.

Acknowledgment

I sincerely appreciate all the efforts from Julia developers. The Julia Language is a beautiful language that I love very much. It changed my daily computing life completely. I am thankful to the developers of the JuMP and other related packages. After JuMP, I no longer look for better modeling languages. I am also grateful to Joo Yeon Woo for the cover design and Bomin Kwon for the cat drawing.

Tampa, Florida

Changhyun Kwon

Chapter 1 Introduction and Installation

This chapter will introduce what the Julia Language is and explain why I love it. More importantly, this chapter will teach you how to obtain Julia and install it in your machine. Well, at this moment, the most challenging task for using Julia in computing would probably be installing the language and other libraries and programs correctly in your own machine. I will go over every step with fine details with screenshots for both Windows and Mac machines. I assumed that Linux users can handle the installation process well enough without much help from this book by reading online manuals and googling. Perhaps the Mac section could be useful to Linux users.

All Julia codes in this book are shared as a git repository and are available at the book website: http://www.chkwon.net/julia. Codes are tested with

Julia v1.3.0JuMP v0.21.2Optim v0.20.6

I will introduce what JuMP and Optim are gradually later in the book.

1.1 What is Julia and Why Julia?

The Julia Language is a young emerging language, whose primary target is technical computing. It is developed for making technical computing more fun and more efficient. There are many good things about the Julia Language from the perspective of computer scientists and software engineers; you can read about the language at the official website1.

Here is a quote from the creators of Julia from their first official blog article “Why We Created Julia”2:

“We want a language that’s open source, with a liberal license. We want the speed of C with the dynamism of Ruby. We want a language that’s homoiconic, with true macros like Lisp, but with obvious, familiar mathematical notation like Matlab. We want something as usable for general programming as Python, as easy for statistics as R, as natural for string processing as Perl, as powerful for linear algebra as Matlab, as good at gluing programs together as the shell. Something that is dirt simple to learn, yet keeps the most serious hackers happy. We want it interactive and we want it compiled.

(Did we mention it should be as fast as C?)”

So this is how Julia was created, to serve all above greedy wishes.

Let me tell you my story. I used to be a Java developer for a few years before I joined a graduate school. My first computer codes for homework assignments and course projects were naturally written in Java; even before then, I used C for my homework assignments for computing when I was an undergraduate student. Later, in the graduate school, I started using MATLAB, mainly because my fellow graduate students in the lab were using MATLAB. I needed to learn from them, so I used MATLAB.

I liked MATLAB. Unlike in Java and C, I don’t need to declare every single variable before I use it; I just use it in MATLAB. Arrays are not just arrays in the computer memory; arrays in MATLAB are just like vectors and matrices. Plotting computation results is easy. For modeling optimization problems, I used GAMS and connected with solvers like CPLEX. While the MATLAB-GAMS-CPLEX chain suited my purpose well, I wasn’t that happy with the syntax of GAMS—I couldn’t fully understand—and the slow speed of the interface between GAMS and MATLAB. While CPLEX provides complete connectivities with C, Java, and Python, it was very basic with MATLAB.

When I finished with my graduate degree, I seriously considered Python. It was—and still is—a very popular choice for many computational scientists. CPLEX also has a better support for Python than MATLAB. Unlike MATLAB, Python is a free and open source language. However, I didn’t go with Python and decided to stick with MATLAB. I personally don’t like \( 0 \) being the first index of arrays in C and Java. In Python, it is also \( 0 \). In MATLAB, it is \( 1 \). For example, if we have a vector like:

it may be written in MATLAB as:

v = [1; 0; 3; -1]

The first element of this vector should be accessible by v(1), not v(0). The \( i \)-th element must be v(i), not v(i-1). So I stayed with MATLAB.

Later in 2012, the Julia Language was introduced and it looked attractive to me, since at least the array index begins with \( 1 \). After some investigations, I still didn’t move to Julia at that time. It was ugly in supporting optimization modeling and solvers. I kept using MATLAB.

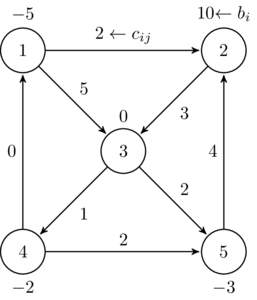

In 2014, I came across several blog articles and tweets talking about Julia again. I gave it one more look. Then I found a package for modeling optimization problems in Julia, called JuMP—Julia for Mathematical Programming. After spending a few hours, I fell in love with JuMP and decided to go with Julia, well more with JuMP. Here is a part of my code for solving a network optimization problem:

@variable(m, 0<= x[links] <=1)

@objective(m, Min, sum(c[(i,j)] * x[(i,j)] for (i,j) in links) )

for i=1:no_node

@constraint(m, sum(x[(ii,j)] for (ii,j) in links if ii==i )

- sum(x[(j,ii)] for (j,ii) in links if ii==i ) == b[i])

end

optimize!(m)

This is indeed a direct “translation” of the following mathematical language:

subject to

I think it is a very obvious translation. It is quite beautiful, isn’t it?

CPLEX and its competitor Gurobi are also very smoothly connected with Julia via JuMP. Why should I hesitate? After several years of using Julia, I still love it—I even wrote a book.

1.2 Installing Julia

Graduate students and researchers are strongly recommended to install Julia in their local computers. In this guide, we will first install Julia and then install two optimization packages, JuMP and GLPK. JuMP stands for ‘Julia for Mathematical Programming’, which is a modeling language for optimization problems. GLPK is an open-source linear optimization solver that can solve both continuous and discrete linear programs. Windows users go to Section 1.2.1, and Mac users go to Section 1.2.2.

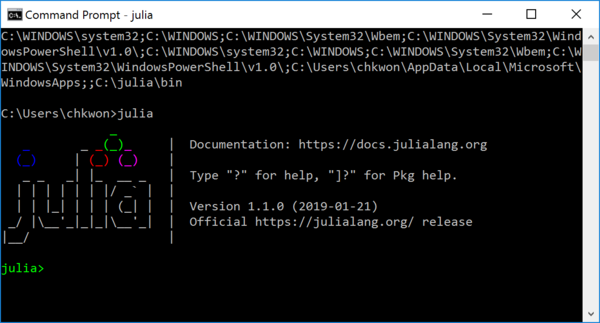

1.2.1 Installing Julia in Windows

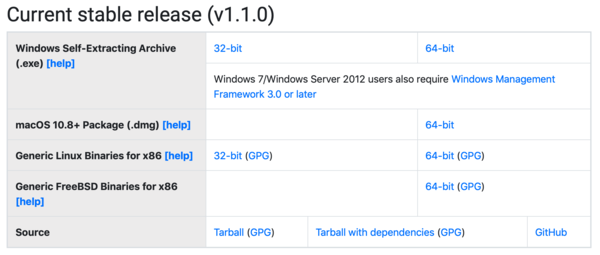

- Step 1. Download Julia from the official website.3 (Select an appropriate version: 32-bit or 64-bit. 64-bit recommended whenever possible.)

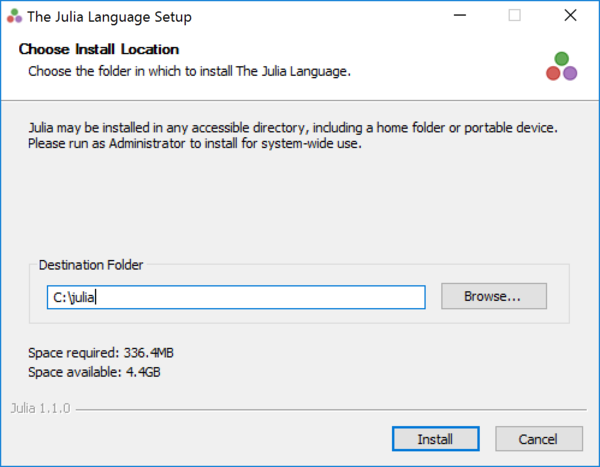

- Step 2. Install Julia in

C:\julia. (You need to make the installation folder consistent with the path you set in Step 3.)

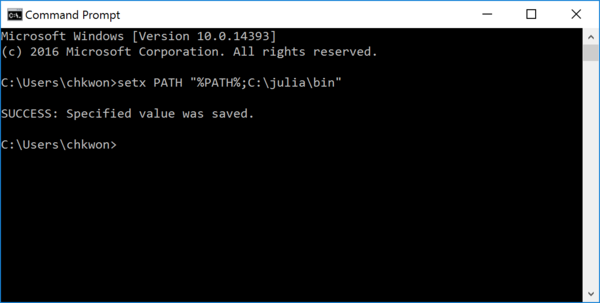

- Step 3. Open a Command Prompt and enter the following command:

setx PATH "%PATH%;C:\julia\bin"

If you do not know how to open a Command Prompt, just google ‘how to open command prompt windows.’

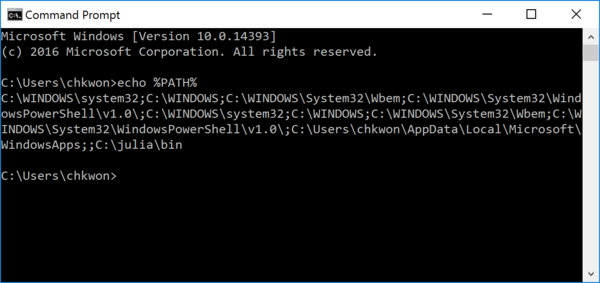

- Step 4. Open a NEW command prompt and type

echo %PATH%

The output must include

C:\julia\binin the end. If not, you must have something wrong. - Step 5. Run

julia.

You have successfully installed the Julia Language on your Windows computer. Now it is time to install additional packages for mathematical optimization.

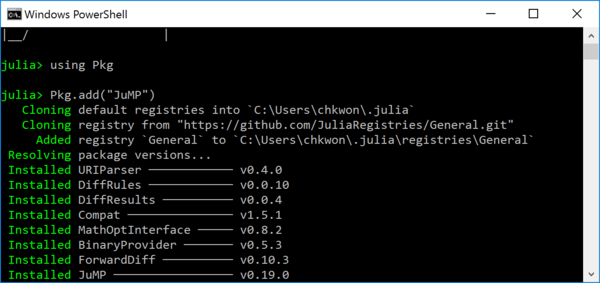

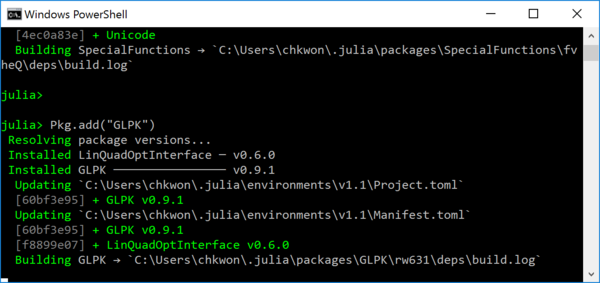

- Step 6. In your Julia prompt, type

Installing the first package can take long time, because it initializes your Julia package folder and synchronizes with the entire package list.

julia> using Pkg julia> Pkg.add("JuMP") julia> Pkg.add("GLPK")

- Step 7. Open Notepad (or any other text editor such as Visual Studio Code4) and type the following, and save the file as

script.jlin some folder of your choice.using JuMP, GLPK m = Model(GLPK.Optimizer) @variable(m, 0 <= x <= 2 ) @variable(m, 0 <= y <= 30 ) @objective(m, Max, 5x + 3*y ) @constraint(m, 1x + 5y <= 3.0 ) JuMP.optimize!(m) println("Objective value: ", JuMP.objective_value(m)) println("x = ", JuMP.value(x)) println("y = ", JuMP.value(y))

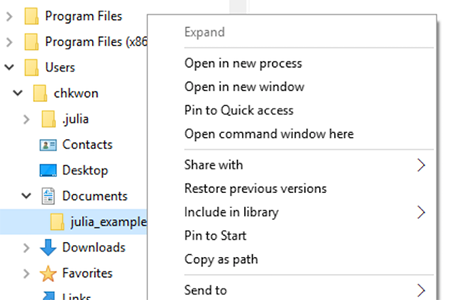

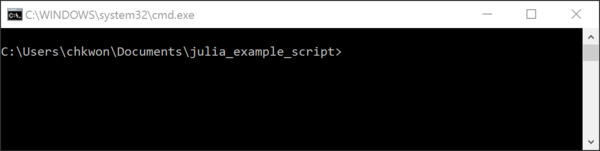

- Step 8. Press and hold your

ShiftKey and right-click the folder name, and choose “Open command window here.”

- Step 9. Type

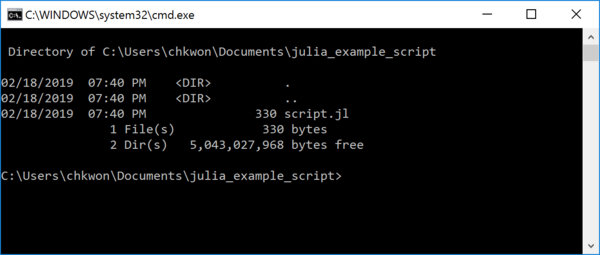

dirto see your script filescript.jl.

If you see a filename such as

script.jl.txt, use the following command to rename:ren script.jl.txt script.jl - Step 10. Type

julia script.jlto run your julia script.

After a few seconds, the result of your julia script will be printed. Done.

Please proceed to Section 1.2.3.

1.2.2 Installing Julia in macOS

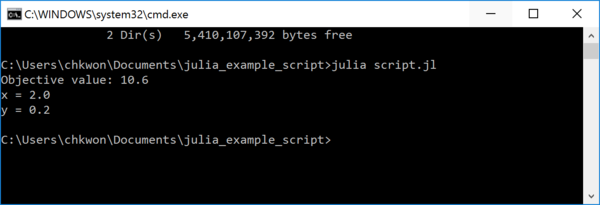

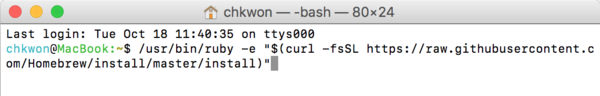

In macOS, we will use a package manager, called Homebrew. It provides a very convenient way of installing software in macOS.

- Step 1. Open “Terminal.app” from your Applications folder. (If you do not know how to open it, see this video.5 It is convenient to place “Terminal.app” in your dock.

- Step 2. Visit http://brew.sh and follow the instruction to install Homebrew. It may ask you to enter your password to install Xcode Command Line Tools.

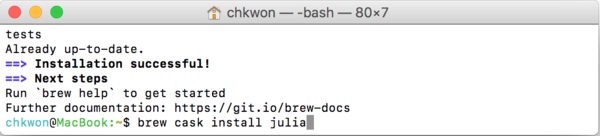

- Step 3. Installing Julia using Homebrew: In your terminal, enter the following command:

brew cask install julia

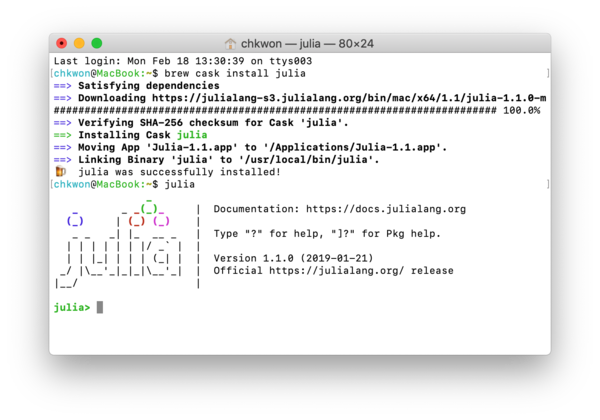

- Step 5. In your terminal, enter

julia.

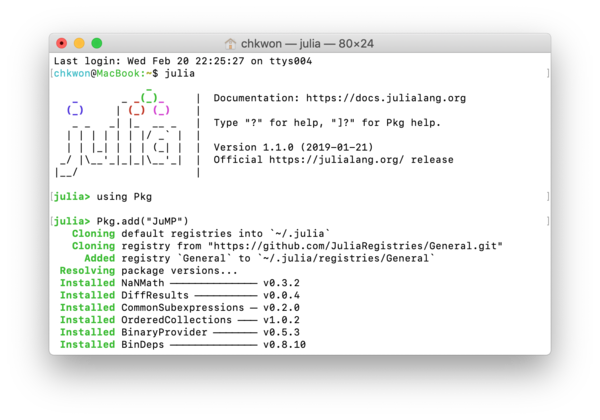

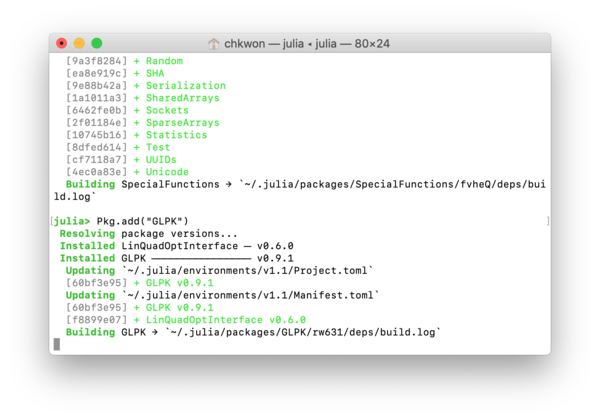

- Step 6. In your Julia prompt, type

Installing the first package can take a long time, because it initializes your Julia package folder and synchronizes with the entire package list.

julia> using Pkg julia> Pkg.add("JuMP") julia> Pkg.add("GLPK")

- Step 7. Open TextEdit (or any other text editor such as Visual Studio Code6) and type the following, and save the file as

script.jlin some folder of your choice.using JuMP, GLPK m = Model(GLPK.Optimizer) @variable(m, 0 <= x <= 2 ) @variable(m, 0 <= y <= 30 ) @objective(m, Max, 5x + 3*y ) @constraint(m, 1x + 5y <= 3.0 ) JuMP.optimize!(m) println("Objective value: ", JuMP.objective_value(m)) println("x = ", JuMP.value(x)) println("y = ", JuMP.value(y))

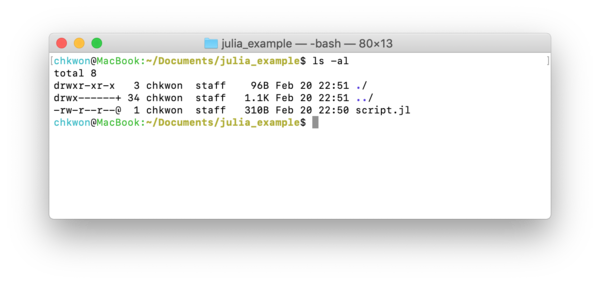

- Step 8. Open a terminal window7 at the folder that contains your

script.jl. - Step 9. Type

ls –alto check your script file.

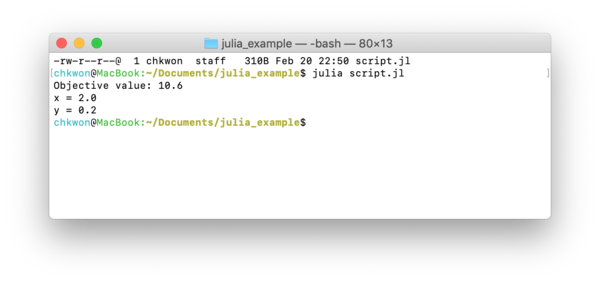

- Step 10. Type

julia script.jlto run your script.

After a few seconds, the result of your julia script will be printed. Done.

Please proceed to Section 1.2.3.

1.2.3 Running Julia Scripts

When you are ready, there are basically two methods to run your Julia script:

- In your Command Prompt or Terminal, enter

C:> julia your-script.jl - In your Julia prompt, enter

julia> include("your-script.jl").

1.2.4 Installing Gurobi

Instead of GLPK, one can use Gurobi, which is a commercial optimization solver package for solving LP, MILP, QP, MIQP, etc. Gurobi is free for students, teachers, professors, or anyone else related to educational organizations.

To install, follow these steps:

- Download Gurobi Optimizer8 and install in your computer. (You will need to register as an academic user.)

- Request a free academic license9 and follow their instructions to activate it.

- Run Julia and add the

Gurobipackage. You need to tell Julia where Gurobi is installed:On Windows:

julia> ENV["GUROBI_HOME"] = "C:\\Program Files\\gurobi910\\win64" julia> using Pkg julia> Pkg.add("Gurobi")

On macOS:

julia> ENV["GUROBI_HOME"] = "/Library/gurobi910/mac64" julia> using Pkg julia> Pkg.add("Gurobi")

- Ready. Test the following code:

using JuMP, Gurobi m = Model(Gurobi.Optimizer) @variable(m, x <= 5) @variable(m, y <= 45) @objective(m, Max, x + y) @constraint(m, 50x + 24y <= 2400) @constraint(m, 30x + 33y <= 2100) JuMP.optimize!(m) println("Objective value: ", JuMP.objective_value(m)) println("x = ", JuMP.value(x)) println("y = ", JuMP.value(y))

1.2.5 Installing CPLEX

Instead of Gurobi, you can install and connect the CPLEX solver, which is also free to academics.

You can follow this step by step guide to install:

- Go to the IBM ILOG CPLEX Optimization Studio page10.

- Click ‘Access free academic edition.’

- Log in with your institution email and certify.

- Download an appropriate version of IBM ILOG CPLEX Optimization Studio. It should be v12.10 or higher.

- Run the downloaded file and install CPLEX. I recommend using the default installation folder.

- Add the

CPLEXpackage in Julia. You have to tell Julia where the CPLEX library is installed.On Windows:

julia> ENV["CPLEX_STUDIO_BINARIES"] = "C:\\Program Files\\CPLEX_Studio1210\\cplex\\bin\\x86-64_win\\" julia> using Pkg julia> Pkg.add("CPLEX") julia> Pkg.build("CPLEX")

On macOS:

julia> ENV["CPLEX_STUDIO_BINARIES"] = "/Applications/CPLEX_Studio1210/cplex/bin/x86-64_osx/" julia> using Pkg julia> Pkg.add("CPLEX") julia> Pkg.build("CPLEX")

- Ready. Test the following code:

using JuMP, CPLEX m = Model(CPLEX.Optimizer) @variable(m, x <= 5) @variable(m, y <= 45) @objective(m, Max, x + y) @constraint(m, 50x + 24y <= 2400) @constraint(m, 30x + 33y <= 2100) JuMP.optimize!(m) println("Objective value: ", JuMP.objective_value(m)) println("x = ", JuMP.value(x)) println("y = ", JuMP.value(y))

1.3 Installing IJulia

You can also use an interactive Julia environment in your local computer, called Jupyter Notebook11. Well, at first there was IPython notebook that was an interactive programming environment for the Python language. It has been popular, and now it is extended to cover many other languages such as R, Julia, Ruby, etc. The extension became the Jupyter Notebook project. For Julia, it is called IJulia, following the naming convention of IPython.

To use IJulia, we need a distribution of Python and Jupyter. Julia can automatically install a distribution for you, unless you want to install it by yourself. If you let Julia install Python and Jupyter, they will be private to Julia, i.e. you will not be able to use Python and Jupyter outside of Julia.

The following process will automatically install Python and Jupyter.

- Open a new terminal window and run Julia. Initialize environment variables:

julia> ENV["PYTHON"] = "" "" julia> ENV["JUPYTER"] = "" ""

- Install

IJulia:julia> using Pkg julia> Pkg.add("IJulia")

- To open the

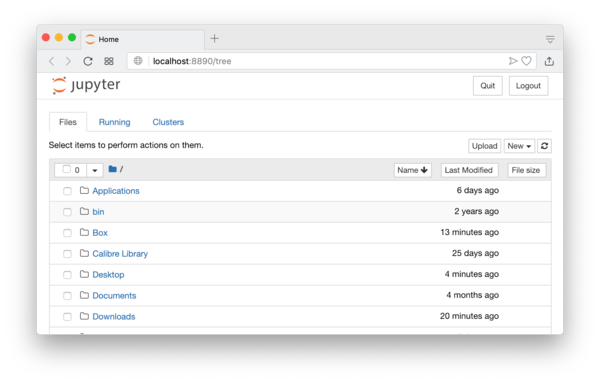

IJulianotebook in your web browser:julia> using IJulia julia> notebook()

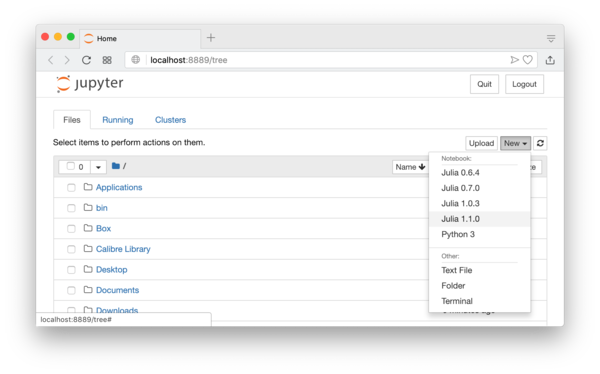

It will open a webpage in your browser that looks like the following screenshot:

The current folder will be your home folder. You can move to another folder and also create a new folder by clicking the “New” button on the top-right corner of the screen. After locating a folder you want, you can now create a new IJulia notebook by clicking the “New” button again and select the julia version of yours, for example “Julia 1.1.0”. See Figure 1.1.

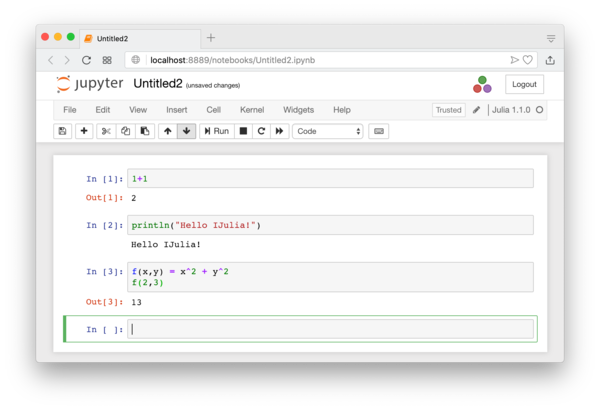

It will basically open an interactive session of the Julia Language. If you have used Mathematica or Maple, the interface will look familiar. You can test basic Julia commands. When you need to evaluate a block of codes, press Shift+Enter, or press the “play” button. See Figure 1.2.

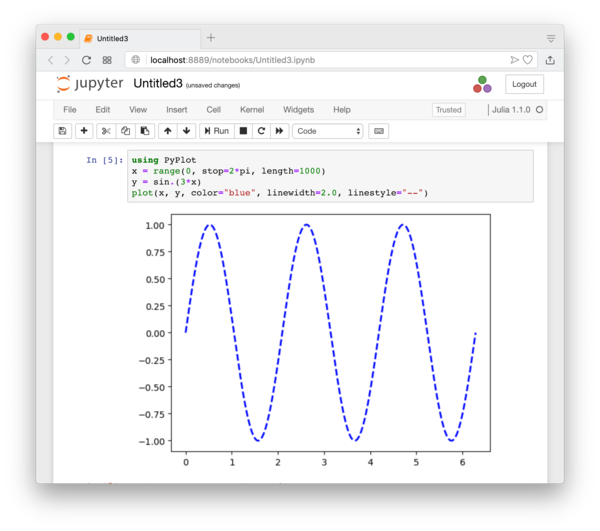

If you properly install a plotting package like PyPlot (details in Section 3.10.1), you can also do plotting directly within the IJulia notebook as shown in Figure 1.4.

Personally, I prefer the REPL for most tasks, but I do occasionally use IJulia, especially when I need to test some simple things and need to plot the result quickly, or when I need to share the result of Julia computation with someone else. (IJulia can export the notebook in various formats, including HTML and PDF.)

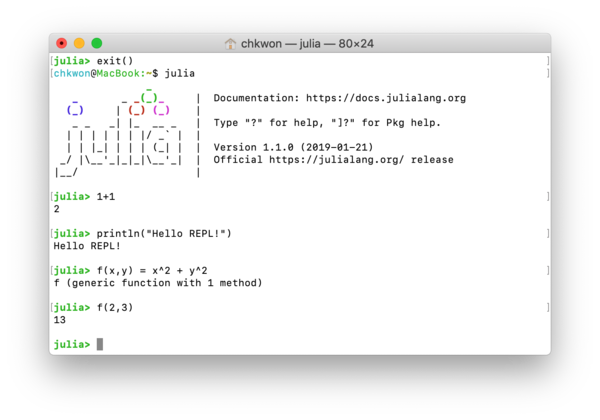

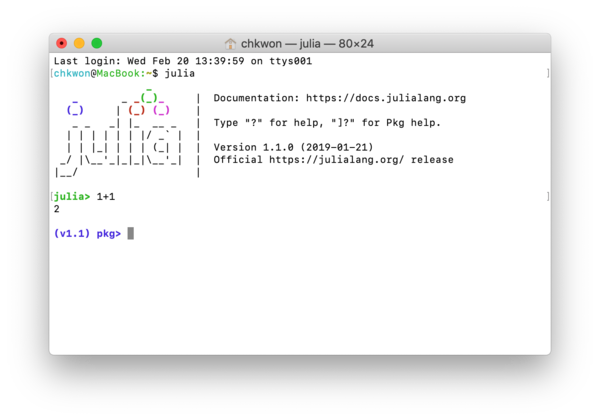

What is REPL? It stands for read-eval-print loop. It is the Julia session that runs in your terminal; see Figure 1.3, which must look familiar to you already.

1.4 Package Management

There are many useful packages in Julia and we rely many parts of our computations on packages. If you have followed my instructions to install Julia, JuMP, Gurobi, and CPLEX, you have already installed a few packages. There are some more commands that are useful in managing packages.

julia> using Pkg

julia> Pkg.add("PackageName")

This installs a package, named PackageName. To find its online repository, you can just google the name PackageName.jl, and you will be directed to a repository hosted at GitHub.com.

Using Pkg.add requires using Pkg first. In REPL, by pressing the ‘]’ key, you can enter the package management mode (Figure 1.5) and the prompt will change as follows:

(v1.3) pkg>

Then to install a package you can simply enter:

(v1.3) pkg> add PackageName

To install the JuMP package, you can do:

(v1.3) pkg> add JuMP

To come back to the julia prompt, press the backspace or delete key.

julia> Pkg.rm("PackageName")

(v1.3) pkg> rm PackageName

This removes the package.

julia> Pkg.update()

(v1.3) pkg> update

This updates all packages that are already installed in your machine to the most recent versions.

julia> Pkg.status()

(v1.3) pkg> status

This displays what packages are installed and what their versions are. If you just want to know the version of a specific package, you can do:

julia> Pkg.installed()["PackageName"]

julia> Pkg.build("PackageName")

(v1.3) pkg> build PackageName

Occasionally, installing a package will fail during the Pkg.add("PackageName") process, usually because some libraries are not installed or system path variables are not configured correctly. Try to install some required libraries again and check the system path variables first. Then you may need to reboot your system or restart your Julia session. Then Pkg.build("PackageName"). Since you have downloaded package files during Pkg.build("PackageName"), you don’t need to download them again; you just build it again.

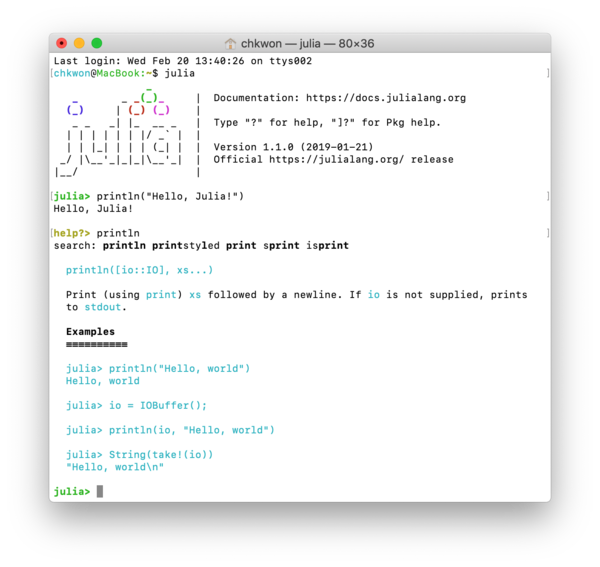

1.5 Help

In REPL, you can use the Help mode. By pressing the ? key in REPL, you can enter the help mode. The prompt will change as follows:

help?>

Then type in any function name, for example, println, which results in:

help?> println

search: println printstyled print sprint isprint

println([io::IO], xs...)

Print (using print) xs followed by a newline. If io is not supplied, prints to

stdout.

Examples

≡≡≡≡≡≡≡≡≡≡

julia> println("Hello, world")

Hello, world

julia> io = IOBuffer();

julia> println(io, "Hello, world")

julia> String(take!(io))

"Hello, world\n"

See also Figure 1.6.

Readers can find codes and other helpful resources in the author’s website at

which also includes a link to a Facebook page of this book for discussion and communication.

This book does not teach everything of the Julia Language—only a very small part of it. When you want to learn more about the language, the first place you need to visit is

where many helpful books, tutorials, videos, and articles are listed. Also, you will need to visit the official documentation of the Julia Language at

which I think serves as a good tutorial as well.

When you have a question, there will be many Julia enthusiasts ready for you. For questions and discussion, visit

and

You can also ask questions at http://stackoverflow.com with tag julia-lang.

The webpage of JuMP is worth visiting for information about the JuMP.jl package.

Chapter 2 Simple Linear Optimization

This chapter provides a quick guide for solving simple linear optimization problems. For modeling, we use the JuMP package, and for computing, we use one of the following solvers.

- Clp: an open-source solver for linear programming (LP) problems from COIN-OR.

- Cbc: an open-source solver for mixed integer linear programming (MILP) problems from COIN-OR.

- GLPK: an open-source solver for mixed integer linear programming problem (MILP) problems from GNU.

- Gurobi: a commercial solver for both LP and MILP, free for academic users

- CPLEX: a commercial solver for both LP and MILP, free for academic users

Open-source solvers Clp, Cbc, and GLPK can be obtained by simply installing the corresponding Julia packages:

julia> using Pkg

julia> Pkg.add("Clp")

julia> Pkg.add("Cbc")

julia> Pkg.add("GLPK")

In fact, the Clp package automatically installs the Cbc package. COIN-OR is an open-source initiative, titled “Computational Infrastructure for Operations Research.”

For commercial solvers Gurobi and CPLEX, one must first install the solver software, and then install the corresponding Julia packages:

julia> using Pkg

julia> Pkg.add("Gurobi")

julia> Pkg.add("CPLEX")

There are a couple of things to do before you add Julia packages. See Sections 1.2.4 and 1.2.5 for the details.

There are some alternatives available, both open-source and commercial solvers. See the list of available solvers via JuMP1. Nonlinear optimization solvers will be discussed in Chapter 8.

2.1 Linear Programming (LP) Problems

Once you have installed the JuMP package and an optimization solver mentioned above, we can have Julia solve linear programming (LP) and mixed integer linear programming (MILP) problems easily. For example, consider the following LP problem:

subject to

Using Julia and JuMP, we can write the following code:

code/chap2/LP1.jl

using JuMP, GLPK

# Preparing an optimization model

m = Model(GLPK.Optimizer)

# Declaring variables

@variable(m, 0<= x1 <=10)

@variable(m, x2 >=0)

@variable(m, x3 >=0)

# Setting the objective

@objective(m, Max, x1 + 2x2 + 5x3)

# Adding constraints

@constraint(m, constraint1, -x1 + x2 + 3x3 <= -5)

@constraint(m, constraint2, x1 + 3x2 - 7x3 <= 10)

# Printing the prepared optimization model

print(m)

# Solving the optimization problem

JuMP.optimize!(m)

# Printing the optimal solutions obtained

println("Optimal Solutions:")

println("x1 = ", JuMP.value(x1))

println("x2 = ", JuMP.value(x2))

println("x3 = ", JuMP.value(x3))

# Printing the optimal dual variables

println("Dual Variables:")

println("dual1 = ", JuMP.shadow_price(constraint1))

println("dual2 = ", JuMP.shadow_price(constraint2))

The above code is pretty much self-explanatory, but here are some explanations. We first declare a placeholder for an optimization model:

m = Model(GLPK.Optimizer)

where we also indicated that we want to use the GLPK optimization solver. We call the model m.

We declare three variables:

@variable(m, 0<= x1 <=10)

@variable(m, x2 >= 0)

@variable(m, x3 >= 0)

where we used ‘macros’ from the JuMP package, @variable. In Julia, macros do repeated jobs for you. It is somewhat similar to ‘functions’ with some important differences. Refer to the official documentation2.

Using another macro @objective, we set the objective:

@objective(m, Max, x1 + 2x2 + 5x3)

Two constraints are added by the @constraint macro:

@constraint(m, constraint1, -x1 + x2 + 3x3 <= -5)

@constraint(m, constraint2, x1 + 3x2 - 7x3 <= 10)

Note that constraint1 and constraint2 are the names of those constraints. These names will be useful for obtaining the corresponding dual variable values.

We are now ready with the optimization problem. If you like you can print the optimization model and check how it is written, the code is as simple as:

print(m)

We solve the optimization problem:

JuMP.optimize!(m)

After solving the optimization problem, we can obtain the values of variables at the optimality by using the JuMP.value() function:

println("Optimal Solutions:")

println("x1 = ", JuMP.value(x1))

println("x2 = ", JuMP.value(x2))

println("x3 = ", JuMP.value(x3))

where println() is a function that puts some text in a line on the screen. If you don’t want to change the line after you print the text, use the print() function instead.

To obtain the values of optimal dual variables, call JuMP.shadow_price() with the corresponding constraint names as follows:

println("Dual Variables:")

println("dual1 = ", JuMP.shadow_price(constraint1))

println("dual2 = ", JuMP.shadow_price(constraint2))

IMPORTANT: There is also the JuMP.dual() function defined. However, the sign of JuMP.dual() results might not be as you would expect, since it follows the convention of conic duality. For linear optimization problems, JuMP.shadow_price() provides dual variable values as defined in most standard textbooks. Please refer to the relevant discussion in the JuMP documentation3.

In my machine, the output by Gurobi looks like:

julia> include("LP1.jl")

Max x1 + 2 x2 + 5 x3

Subject to

x1 ≥ 0.0

x2 ≥ 0.0

x3 ≥ 0.0

x1 ≤ 10.0

-x1 + x2 + 3 x3 ≤ -5.0

x1 + 3 x2 - 7 x3 ≤ 10.0

Optimal Solutions:

x1 = 10.0

x2 = 2.1875

x3 = 0.9375

Dual Variables:

dual1 = 1.8125

dual2 = 0.06249999999999998

If you want to use the Gurobi optimization solver instead of GLPK, use the following inputs:

using JuMP, Gurobi

m = Model(Gurobi.Optimizer)

For CPLEX:

using JuMP, CPLEX

m = Model(CPLEX.Optimizer)

There are many other optimization solvers supported by the JuMP package. See the manual of JuMP for a list.4

2.2 Alternative Ways of Writing LP Problems

We can use arrays to define variables. For the same LP problem as in the previous section, we can write a Julia code alternatively as follows:

To define the variable \( \vect{x} \) as a three-dimensional vector, we can write:

@variable(m, x[1:3] >= 0)

where 1:3 means an array with numbers from 1 to 3 (incrementing by 1).

Then we prepare a column vector \( \vect{c} \) and use it for defining the objective function:

c = [1; 2; 5]

@objective(m, Max, sum( c[i]*x[i] for i in 1:3))

which is essentially same as:

In LP problems, constraints are usually written in the vector-matrix notation as \( \mat{A} \vect{x} \leq \vect{b} \). Following this convention, we prepare a matrix \( \mat{A} \) and a vector \( \vect{b} \), and use them for adding constraints:

A = [-1 1 3;

1 3 -7]

b = [-5; 10]

@constraint(m, constraint1, sum( A[1,i]*x[i] for i in 1:3) <= b[1] )

@constraint(m, constraint2, sum( A[2,i]*x[i] for i in 1:3) <= b[2] )

This will be impractical, if we have 100 constraints, instead of just 2. Alternatively, we can write:

constraint = Dict()

for j in 1:2

constraint[j] = @constraint(m, sum(A[j,i]*x[i] for i in 1:3) <= b[j])

end

Even better, we can also write:

@constraint(m, constraint[j in 1:2], sum(A[j,i]*x[i] for i in 1:3) <= b[j])

Use any form that works for you. The JuMP package provides many different methods of adding constraints. Read the official document.5

Finally, we add the bound constraint on \( x_1 \):

@constraint(m, bound, x[1] <= 10)

The final code is presented:

code/chap2/LP2.jl

using JuMP, GLPK

m = Model(GLPK.Optimizer)

c = [ 1; 2; 5]

A = [-1 1 3;

1 3 -7]

b = [-5; 10]

@variable(m, x[1:3] >= 0)

@objective(m, Max, sum( c[i]*x[i] for i in 1:3) )

@constraint(m, constraint[j in 1:2], sum( A[j,i]*x[i] for i in 1:3 ) <= b[j] )

@constraint(m, bound, x[1] <= 10)

JuMP.optimize!(m)

println("Optimal Solutions:")

for i in 1:3

println("x[$i] = ", JuMP.value(x[i]))

end

println("Dual Variables:")

for j in 1:2

println("dual[$j] = ", JuMP.shadow_price(constraint[j]))

end

Note that there have been changes in the code for printing. The result looks like:

julia> include("LP2.jl")

Optimal Solutions:

x[1] = 10.0

x[2] = 2.1875

x[3] = 0.9375

Dual Variables:

dual[1] = 1.8125

dual[2] = 0.06250000000000003

2.3 Yet Another Way of Writing LP Problems

In LP2.jl, we used 1:3 for indices for \( x_i \) and 1:2 for indices of constraints. If you want to change the data and solve another problem with the same structure, then you will have to change the numbers 3 and 2 manually, which of course is very tedious and will most likely create unwanted bugs. Instead, we can assign names for those sets of indices:

index_x = 1:3

index_constraints = 1:2

Then, you can rewrite the code for adding constraints, for example:

@constraint(m, constraint[j in index_constraints],

sum( A[j,i]*x[i] for i in index_x ) <= b[j] )

The complete code would look like:

code/chap2/LP3.jl

using JuMP, GLPK

m = Model(GLPK.Optimizer)

c = [ 1; 2; 5]

A = [-1 1 3;

1 3 -7]

b = [-5; 10]

index_x = 1:3

index_constraints = 1:2

@variable(m, x[index_x] >= 0)

@objective(m, Max, sum( c[i]*x[i] for i in index_x) )

@constraint(m, constraint[j in index_constraints],

sum( A[j,i]*x[i] for i in index_x ) <= b[j] )

@constraint(m, bound, x[1] <= 10)

JuMP.optimize!(m)

println("Optimal Solutions:")

for i in index_x

println("x[$i] = ", JuMP.value(x[i]))

end

println("Dual Variables:")

for j in index_constraints

println("dual[$j] = ", JuMP.shadow_price(constraint[j]))

end

The result of LP3.jl should be same of that of LP2.jl.

2.4 Mixed Integer Linear Programming (MILP) Problems

In many applications, variables are often binary or discrete; the resulting optimization problem then becomes an integer programming problem. Further if everything is linear and there are both continuous variables and integer variables, the optimization problem is called a mixed integer linear programming (MILP) problem. The Gurobi and CPLEX optimization solvers can handle this type of problem very well.

Suppose now \( x_2 \) is an integer variable and \( x_3 \) is a binary variable in the previous LP problem. That is:

subject to

Using JuMP, it is very simple to specify integer and binary variables. We can define variables as follows:

@variable(m, 0<= x1 <=10)

@variable(m, x2 >=0, Int)

@variable(m, x3, Bin)

The complete code would look like:

code/chap2/MILP1.jl

using JuMP, GLPK

# Preparing an optimization model

m = Model(GLPK.Optimizer)

# Declaring variables

@variable(m, 0<= x1 <=10)

@variable(m, x2 >=0, Int)

@variable(m, x3, Bin)

# Setting the objective

@objective(m, Max, x1 + 2x2 + 5x3)

# Adding constraints

@constraint(m, constraint1, -x1 + x2 + 3x3 <= -5)

@constraint(m, constraint2, x1 + 3x2 - 7x3 <= 10)

# Printing the prepared optimization model

print(m)

# Solving the optimization problem

JuMP.optimize!(m)

# Printing the optimal solutions obtained

println("Optimal Solutions:")

println("x1 = ", JuMP.value(x1))

println("x2 = ", JuMP.value(x2))

println("x3 = ", JuMP.value(x3))

The result looks like:

julia> include("MILP1.jl")

Max x1 + 2 x2 + 5 x3

Subject to

x3 binary

x2 integer

x1 ≥ 0.0

x2 ≥ 0.0

x1 ≤ 10.0

-x1 + x2 + 3 x3 ≤ -5.0

x1 + 3 x2 - 7 x3 ≤ 10.0

Optimal Solutions:

x1 = 10.0

x2 = 2.0

x3 = 1.0

Chapter 3 Basics of the Julia Language

In this chapter, I cover how we can do most common tasks for computing in operations research and management science with the Julia Language. While I will cover some part of the syntax of Julia, readers must consult with the official documentation1 of Julia for other unexplained usages.

3.1 Vector, Matrix, and Array

Like MATLAB and many other computer languages for numerical computation, Julia provides easy and convenient, but strong, ways of handling vectors and matrices. For example, if you want to create vectors and matrices like

then in Julia, you can simply type

a = [1; 2; 3]

b = [4 5 6]

A = [1 2 3; 4 5 6]

where the semicolon (;) means a new row. Julia will return:

julia> a = [1; 2; 3]

3-element Array{Int64,1}:

1

2

3

julia> b = [4 5 6]

1x3 Array{Int64,2}:

4 5 6

julia> A = [1 2 3; 4 5 6]

2x3 Array{Int64,2}:

1 2 3

4 5 6

We can access the \( (i,j) \)-element of \( \mat{A} \) by A[i,j]:

julia> A[1,3]

3

julia> A[2,1]

4

The transpose of vectors and matrices is easily obtained either of the following codes:

julia> transpose(A)

3x2 Array{Int64,2}:

1 4

2 5

3 6

julia> A'

3x2 Array{Int64,2}:

1 4

2 5

3 6

Let us introduce two column vectors:

a = [1; 2; 3]

c = [7; 8; 9]

The inner product, or dot product, may be obtained by the following way:

julia> a'*c

50

Another way is to use the dot() function. This function is provided in a standard library of Julia, called the LinearAlgebra package.

julia> using LinearAlgebra

julia> dot(a,c)

50

For many other useful functions in the LinearAlgebra package, see

the official document2.

The identity matrices of certain sizes:

julia> Matrix(1.0I, 2, 2)

2x2 Array{Float64,2}:

1.0 0.0

0.0 1.0

julia> Matrix(1.0I, 3, 3)

3x3 Array{Float64,2}:

1.0 0.0 0.0

0.0 1.0 0.0

0.0 0.0 1.0

The matrices of zeros and ones of custom sizes:

julia> zeros(4,1)

4x1 Array{Float64,2}:

0.0

0.0

0.0

0.0

julia> zeros(2,3)

2x3 Array{Float64,2}:

0.0 0.0 0.0

0.0 0.0 0.0

julia> ones(1,3)

1x3 Array{Float64,2}:

1.0 1.0 1.0

julia> ones(3,2)

3x2 Array{Float64,2}:

1.0 1.0

1.0 1.0

1.0 1.0

When we have a square matrix

julia> B = [1 3 2; 3 2 2; 1 1 1]

3x3 Array{Int64,2}:

1 3 2

3 2 2

1 1 1

its inverse can be computed:

julia> inv(B)

3x3 Array{Float64,2}:

1.11022e-16 1.0 -2.0

1.0 1.0 -4.0

-1.0 -2.0 7.0

Of course, there are some numerical errors:

julia> B * inv(B)

3x3 Array{Float64,2}:

1.0 0.0 0.0

0.0 1.0 0.0

-2.22045e-16 0.0 1.0

Note that the off-diagonal elements are not exactly zero. This is because the computation of the inverse matrix is not exact. For example, the (2,1)-element of the inverse matrix is not exactly 1, but:

julia> inv(B)[2,1]

1.0000000000000004

In the above, we have seen something like Int64 and Float64. In 32-bit systems, it would have been Int32 and Float32. These are data types. If the elements in your vectors and matrices are integers for sure, you can use Int64. On the other hand, if any element is non-integer values, such as \( 1.0000000000000004 \), you need to use Float64. These are usually done automatically:

julia> a = [1; 2; 3]

3-element Array{Int64,1}:

1

2

3

julia> b = [1.0; 2; 3]

3-element Array{Float64,1}:

1.0

2.0

3.0

In some cases, you will want to first create an array object of a certain type, then assign values. This can be done by calling Array with a keyword undef. For example, if we want an array of Float64 data type and of size 3, then we can do:

julia> d = Array{Float64}(undef, 3)

3-element Array{Float64,1}:

2.287669227e-314

2.2121401076e-314

2.2147848607e-314

Some values that are close to zero are pre-assigned. Now you can assign the value you want:

julia> d[1] = 1

1

julia> d[2] = 2

2

julia> d[3] = 3

3

julia> d

3-element Array{Float64,1}:

1.0

2.0

3.0

Although you entered the exact integer values, the resulting array is of Float64 type, as it was originally defined.

If your array represents a vector or a matrix, I recommend you create an array by explicitly specifying the dimension. For a 3-by-1 column vector, you have to do:

p = Array{Float64}(undef, 3, 1)

and for a 1-by-3 row vector, you have to do:

q = Array{Float64}(undef, 1, 3)

Then the products can be written as p*q or q*p.

For more information on functions related to arrays, please refer to the official document.3

3.2 Tuple

The data type of arrays can be a pair of data types. For example, if we want to store (1,2), (2,3), and (3,4) in an array, we can create an array of type (Int64, Int64).

julia> pairs = Array{Tuple{Int64, Int64}}(undef, 3)

3-element Array{Tuple{Int64,Int64},1}:

(4475364336, 4639719056)

(4616151040, 4596384320)

(0, 4596384320)

julia> pairs[1] = (1,2)

(1, 2)

julia> pairs[2] = (2,3)

(2, 3)

julia> pairs[3] = (3,4)

(3, 4)

julia> pairs

3-element Array{Tuple{Int64,Int64},1}:

(1,2)

(2,3)

(3,4)

This is same as:

julia> pairs = [ (1,2); (2,3); (3,4) ]

3-element Array{(Int64,Int64),1}:

(1, 2)

(2, 3)

(3, 4)

These types of arrays can be useful for handling network data with nodes and links, for example for storing data like \( (i,j) \). When you want \( (i,j,k) \), simply do:

julia> ijk_array = Array{Tuple{Int64, Int64, Int64}}(undef, 3)

3-element Array{Tuple{Int64,Int64,Int64},1}:

(4634730720, 4634730640, 1)

(4634730800, 4634730880, 4634730960)

(3, 2, 2)

julia> ijk_array[1] = (1,4,2)

(1, 4, 2)

...

3.3 Indices and Ranges

When we are dealing with indices of arrays—vectors, matrices, or any other arrays—a range will be useful. If we want a set of indices from 1 to 9, we can simply do 1:9. If we want steps of 2, we do 1:2:9. Suppose we have a vector \( \vect{a} \):

julia> a = [10; 20; 30; 40; 50; 60; 70; 80; 90]

9-element Array{Int64,1}:

10

20

30

40

50

60

70

80

90

If we want the first three elements, we can do:

julia> a[1:3]

3-element Array{Int64,1}:

10

20

30

Some other examples:

julia> a[1:3:9]

3-element Array{Int64,1}:

10

40

70

where 1:3:9 refers to 1, 4, and 7. Although we specified 9 in 1:3:9, it didn’t reach 9.

The last index can be accessed by using a special keyword end. The last three elements can be accessed by:

julia> a[end-2:end]

3-element Array{Int64,1}:

70

80

90

We can also assign some values using a range:

julia> b = [200; 300; 400]

3-element Array{Int64,1}:

200

300

400

julia> a[2:4] = b

3-element Array{Int64,1}:

200

300

400

julia> a

9-element Array{Int64,1}:

10

200

300

400

50

60

70

80

90

We can also use ranges to define an array. Just toss a range to the collect() function:

julia> c = collect(1:2:9)

5-element Array{Int64,1}:

1

3

5

7

9

Suppose we have a matrix \( \mat{A} \):

julia> A= [1 2 3; 4 5 6; 7 8 9]

3x3 Array{Int64,2}:

1 2 3

4 5 6

7 8 9

The second column of \( \mat{A} \) can be accessed by:

julia> A[:, 2]

3-element Array{Int64,1}:

2

5

8

The second to third columns of \( \mat{A} \):

julia> A[:, 2:3]

3×2 Array{Int64,2}:

2 3

5 6

8 9

The third row can be accessed by:

julia> A[3, :]

3-element Array{Int64,1}:

7

8

9

Note that it returns a column vector. To obtain a row vector, one may consider:

julia> A[3:3, :]

1×3 Array{Int64,2}:

7 8 9

The second to third rows can be obtained by:

julia> A[2:3, :]

2×3 Array{Int64,2}:

4 5 6

7 8 9

3.4 Printing Messages

Displaying messages on the screen is the simplest tool for debugging and checking the computational results. This section introduces functions related to displaying and printing messages.

The most frequently used printing function would be println() and print(). We can simply do:

julia> println("Hello World")

Hello World

The difference between the two functions is that println() adds an empty line after it.

julia> print("Hello "); print("World"); print(" Again")

Hello World Again

julia> println("Hello "); println("World"); println(" Again")

Hello

World

Again

julia>

Combining the custom text with values in a variable is easy:

julia> a = 123.0

123.0

julia> println("The value of a = ", a)

The value of a = 123.0

julia> println("a is $a, and a-10 is $(a-10).")

a is 123.0, and a-10 is 113.0.

It works for arrays:

julia> b = [1; 3; 10]

3-element Array{Int64,1}:

1

3

10

julia> println("b is $b.")

b is [1,3,10].

julia> println("The second element of b is $(b[2]).")

The second element of b is 3.

More advanced functionalities are provided by the @printf macro, which uses the style of printf() of C. This macro is provided by the Printf package. Here is an example:

julia> using Printf

julia> @printf("The %s of a = %f", "value", a)

The value of a = 123.000000

The first argument is the format specification: it involves a string with symbols like %s and %f. In the format specification, %s is a placeholder for a string like "value", and %f is a placeholder for a floating number like a. The placeholder for an integer is %d.

The @printf macro is very useful when we want to print a series of numbers whose digit number differ, but we want to give some good alignment options. Suppose

c = [ 123.12345 ;

10.983 ;

1.0932132 ]

If we just use println(), we obtain:

julia> for i in 1:length(c)

println("c[$i] = $(c[i])")

end

c[1] = 123.12345

c[2] = 10.983

c[3] = 1.0932132

Not so pretty. Use @printf:

julia> for i in 1:length(c)

@printf("c[%d] = %7.3f\n", i, c[i])

end

c[1] = 123.123

c[2] = 10.983

c[3] = 1.093

where %7.3 indicates that we want the total number of digits to be 7 (including the decimal point) and the number of digits below the decimal point to be 3. In addition, \n means a new line.

There is another macro called @sprintf, which is basically the same as @printf, but it returns a string, instead of printing it on the screen. For example:

julia> str = @sprintf("The %s of a = %f", "value", a)

julia> println(str)

The value of a = 123.000000

For more format specifiers, see http://www.cplusplus.com/reference/cstdio/printf/.

3.5 Collection, Dictionary, and For-Loop

When we repeat a similar job for multiple times, we use a for-loop. For example:

julia> for i in 1:5

println("This is number $i.")

end

This is number 1.

This is number 2.

This is number 3.

This is number 4.

This is number 5.

A for-loop has a structure of

for i in I

# do something here for each i

end

where I is a collection. The most common collection type in computing is a range, like 1:5 in the above example.

If you want to stop at a certain point, you can break the loop:

julia> for i in 1:5

if i >= 3

break

end

println("This is number $i.")

end

This is number 1.

This is number 2.

Another very useful collection type is Dictionary. A dictionary has keys and values. For example, suppose we have the following keys and values:

my_keys = ["Zinedine Zidane", "Magic Johnson", "Yuna Kim"]

my_values = ["football", "basketball", "figure skating"]

We can create a dictionary:

julia> d = Dict()

Dict{Any,Any} with 0 entries

julia> for i in 1:length(my_keys)

d[my_keys[i]] = my_values[i]

end

julia> d

Dict{Any,Any} with 3 entries:

"Magic Johnson" => "basketball"

"Zinedine Zidane" => "football"

"Yuna Kim" => "figure skating"

When we use a dictionary, the order of elements saved in the dictionary should not be important. Note in the dictionary d, the order is not same as the order in k and v. If the order is important, we have to be careful with dictionaries.

Using the dictionary d defined above, we can, for example, do something like:

julia> for (key, value) in d

println("$key is a $value player.")

end

Magic Johnson is a basketball player.

Zinedine Zidane is a football player.

Yuna Kim is a figure skating player.

We can also add a new element by:

julia> d["Diego Maradona"] = "football"

"football"

julia> d

Dict{Any,Any} with 4 entries:

"Magic Johnson" => "basketball"

"Zinedine Zidane" => "football"

"Diego Maradona" => "football"

"Yuna Kim" => "figure skating"

For a network, suppose we have the following data:

links = [ (1,2), (3,4), (4,2) ]

link_costs = [ 5, 13, 8 ]

We can create a dictionary for this data:

julia> link_dict = Dict()

Dict{Any,Any} with 0 entries

julia> for i in 1:length(links)

link_dict[ links[i] ] = link_costs[i]

end

julia> link_dict

Dict{Any,Any} with 3 entries:

(1,2) => 5

(4,2) => 8

(3,4) => 13

Then we can use it as follows:

julia> for (link, cost) in link_dict

println("Link $link has cost of $cost.")

end

Link (1,2) has cost of 5.

Link (4,2) has cost of 8.

Link (3,4) has cost of 13.

For more information on collection and dictionary, please refer to the official document4. There are many convenient functions available.

Sometimes while-loops are more useful than for-loops. For the usage of while-loops and other flow controls, see the official document5.

3.6 Function

Just like any mathematical function, functions in Julia accept inputs and return outputs. Consider a simple mathematical function:

We can create a Julia function for this as follows:

function f(x,y)

return 3x + y

end

Simple. Call it:

julia> f(1,3)

6

julia> 3 * ( f(3,2) + f(5,6) )

96

Alternatively, you can define the same function in more compact form, called “assignment form”:

julia> f(x,y) = 3x+y

f (generic function with 1 method)

julia> f(1,3)

6

Functions can have multiple return values. For example:

function my_func(n, m)

a = zeros(n,1)

b = ones(m,1)

return a, b

end

We can receive return values as follows:

julia> x, y = my_func(3,2)

([0.0; 0.0; 0.0], [1.0; 1.0])

julia> x

3x1 Array{Float64,2}:

0.0

0.0

0.0

julia> y

2x1 Array{Float64,2}:

1.0

1.0

When any function f is defined for scalar quantities, we can use f. for vectorized operations. For example, consider sqrt(), which is defined for a scalar quantity:

julia> sqrt(9)

3.0

julia> sqrt([9 16])

ERROR: DimensionMismatch("matrix is not square: dimensions are (1, 2)")

For a vector quantity, we can use sqrt.() as follows:

julia> sqrt.([9 16])

1×2 Array{Float64,2}:

3.0 4.0

Note that the square-root operation is applied to each element.

Adding a dot to the end of the function name can be applied any function.

julia> myfunc(x) = sin(x) + 3*x

myfunc (generic function with 1 method)

julia> myfunc(3)

9.141120008059866

julia> myfunc([5 10])

ERROR: DimensionMismatch("matrix is not square: dimensions are (1, 2)")

julia> myfunc.([5 10])

1×2 Array{Float64,2}:

14.0411 29.456

More details about functions are found in the official document6.

3.7 Scope of Variables

In any programming language, it is important to understand what variables can be accessed where. Let’s consider the following code:

function f(x)

return x+2

end

function g(x)

return 3x+3

end

In this code, although the same variable name x is used in two different functions, the two x variables do not conflict. It is because they are defined in different scope blocks. Examples of scope blocks are function bodies, for loops, and while loops.

When a variable is defined or first introduced, the variable is accessible within the scope block and its sub scope blocks. As an example, consider a script file with the following code:

function f(x)

return x+a

end

function run()

a = 10

return f(5)

end

run()

This will generate an error. To fix the problem, we should define or introduce first the a variable in the parent scope block, which is outside the function blocks. A revised code is:

function f(x)

return x+a

end

function run()

return f(5)

end

a = 10

run()

which returns:

15

Let’s consider another example.

function f2(x)

a = 0

return x+a

end

a = 5

println(f2(1))

println(a)

What will be the printed values of f2(1) and a? Quite confusing. If you can avoid codes like the above example, I think it will be the best. The same a variable name is used in two different places, and it can be quite confusing sometimes. It may lead to serious bugs in your codes. The result of the above example is:

1

5

To better control the scope of variables, we can use keywords like global, local, and const. I—who usually write short codes for algorithm implementations and optimization modeling—think it is best to avoid same variables names in two different code blocks.

Some would write the above example as follows:

function f3(x)

_a = 0

return x + _a

end

a = 5

println(f3(1))

println(a)

where the underscore in front of a indicates it is a local variable.

Some others would write the above example as follows:

function f4(x, a)

return x + a

end

a = 5

println(f4(1, a))

println(a)

which makes it clear that a is a function argument passed from outside the function block.

Suppose we are writing a code to compute the sum of a vector’s elements. One may write:

a = [1 2 3 4 5]

s = 0

for i in 1:length(a)

s += a[i]

end

If you run the above code in REPL, it will generate an error: “s not defined.” In Julia, a for loop creates a scope block and there is an issue regarding global versus local variables. But the following code works fine:

function my_sum(a)

s = 0

for i in 1:5

s += a[i]

end

return s

end

a = [1; 2; 3; 4; 5]

my_sum(a)

Since function has created a scope block already, the for loop inside is already part of the scope block.

For more detailed explanation and more examples, please consult with the official document7.

3.8 Random Number Generation

In scientific computation, we often need to generate random numbers. Monte Carlo Simulation is such a case. We can generate a random number from the uniform distribution between 0 and 1 by simply calling rand():

julia> rand()

0.8689474478700132

julia> rand()

0.33488929348173135

The generated number will be different for each call.

We can also create a vector of random numbers, for example of size 5:

julia> rand(5)

5-element Array{Float64,1}:

0.848729

0.18833

0.591469

0.59092

0.0262999

or a matrix of random numbers, for example of size 4 by 3:

julia> rand(4,3)

4x3 Array{Float64,2}:

0.34406 0.335058 0.261013

0.34656 0.488157 0.600716

0.0110059 0.0919956 0.501252

0.894159 0.301035 0.4308

Random numbers from Uniform\( [0,100] \):

rand() * 100

A vector of \( n \) random numbers from Uniform\( [a,b] \):

rand(n) * (b-a) + a

We can also use rand() for choosing an index randomly from a range:

julia> rand(1:10)

8

julia> rand(1:10)

2

Similarly, Julia provides a function called randn() for the standard Normal distribution with mean 0 and standard deviation 1, or \( N(0,1) \).

julia> randn(2,3)

2x3 Array{Float64,2}:

2.31415 -0.309773 -0.0174724

-0.316515 0.514558 -1.53451

To generate 10 random numbers from a general Normal distribution \( N(\mu, \sigma^2) \):

julia> randn(10) .* sigma .+ mu

10-element Array{Float64,1}:

52.2694

51.4202

45.4651

51.9061

51.1799

47.272

47.585

48.4993

54.2316

46.5286

where mu=50 and sigma=3 are used. Since randn(10) is a vector and sigma and mu are scalars, vectorized operations .* and .+ are used.

Perhaps, we can write our own function for \( N(\mu, \sigma^2) \):

function my_randn(n, mu, sigma)

return randn(n) .* sigma .+ mu

end

and call it:

julia> my_randn(10, 50, 3)

10-element Array{Float64,1}:

48.0865

47.7267

47.3458

48.2318

53.5715

54.3249

53.3419

46.0114

49.7636

56.0803

For any other advanced usages related to probabilistic distributions, the StatsFuns package is available from the Julia Statistics group8. We need to first install the package:

julia> using Pkg

julia> Pkg.add("StatsFuns")

To use functions available in the StatsFuns package, we first load the package:

julia> using StatsFuns

For a Normal distribution with \( \mu=50 \) and \( \sigma=3 \), we set:

julia> mu = 50; sigma = 3;

The probability density function (PDF) value evaluated at 52:

julia> normpdf(mu, sigma, 52)

0.10648266850745075

The cumulative distribution function (CDF) value evaluated at 50:

julia> normcdf(mu, sigma, 50)

0.5

The inverse of CDF for probability 0.5:

julia> norminvcdf(mu, sigma, 0.5)

50.0

For many other probability distributions such as Binomial, Gamma, and Poisson distributions, similar functions are available from the StatsFuns package9.

3.9 File Input/Output

A typical flow of computing in operations research, or any other computational sciences, is to read data from files, to run some computations, and to write the results on an output files for records. File read/write or input/output (I/O) is a very useful and important process. Suppose we have a data file named data.txt that looks like:

code/chap3/data.txt

This is the first line.

This is the second line.

This is the third line.

We can open the file and read all lines from the file as follows:

datafilename = "data.txt"

datafile = open(datafilename)

data = readlines(datafile)

close(datafile)

Then data is an array with each line being an element. Since data contains what we need, we close the file.

julia> data

3-element Array{String,1}:

"This is the first line."

"This is the second line."

"This is the third line."

We usually access each line in a for-loop:

for line in data

println(line)

# process each line here...

end

Writing the results to files is also similarly done.

outputfilename = "results1.txt"

outputfile = open(outputfilename, "w")

print(outputfile, "Magic Johnson")

println(outputfile, " is a basketball player.")

println(outputfile, "Michael Jordan is also a basketball player.")

close(outputfile)

The result looks like:

code/chap3/results1.txt

Magic Johnson is a basketball player.

Michael Jordan is also a basketball player.

By using "a" option, we can append to the existing file:

outputfilename = "results2.txt"

outputfile = open(outputfilename, "a")

println(outputfile, "Yuna Kim is a figure skating player.")

close(outputfile)

The result will read:

code/chap3/results2.txt

Magic Johnson is a basketball player.

Michael Jordan is also a basketball player.

Yuna Kim is a figure skating player.

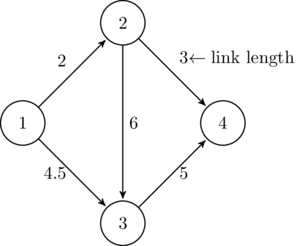

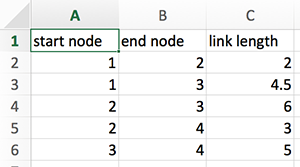

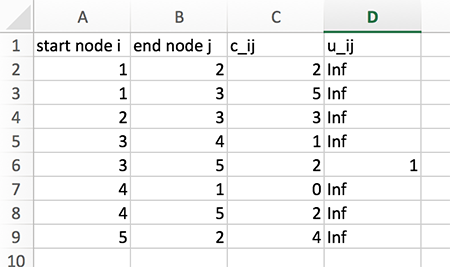

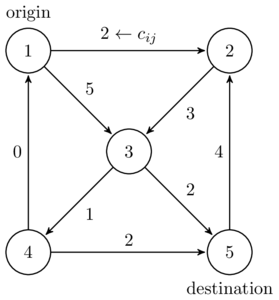

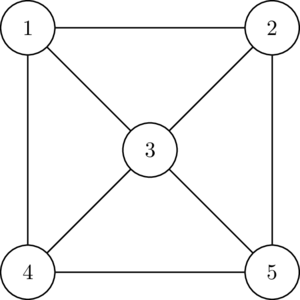

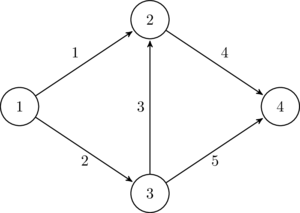

The most important data format may be comma-separated-values files, called CSV files. Data in each line is separated by commas. For example, consider the simple network presented in Figure 3.1. This network may be represented as the following tabular data:

| start node | end node | link length |

| 1 | 2 | 2 |

| 1 | 3 | 4.5 |

| 2 | 3 | 6 |

| 2 | 4 | 3 |

| 3 | 4 | 5 |

In spreadsheet software like Excel, you can enter as follows

and save it as ‘data.csv’. When you save it in Excel, you need to choose ‘Windows Comma Separated (.csv)’, instead of just ‘Comma Separated Values (.csv)’. CSV files are basically text files. The saved data.csv file will look like:

code/chap3/data.csv

start node,end node,link length

1,2,2

1,3,4.5

2,3,6

2,4,3

3,4,5

We read this CSV file as follows:

using DelimitedFiles

csvfilename = "data.csv"

csvdata = readdlm(csvfilename, ',', header=true)

data = csvdata[1]

header = csvdata[2]

With the header=true option, we can separate the header from the data. We obtained data as an array of Float64:

julia> data

5x3 Array{Float64,2}:

1.0 2.0 2.0

1.0 3.0 4.5

2.0 3.0 6.0

2.0 4.0 3.0

3.0 4.0 5.0

Since the third column represents link length, the type of Float64 is fine. On the other hand, the first and second columns represent the node indices, which must be integers. We change the type:

julia> start_node = round.(Int, data[:,1])

5-element Array{Int64,1}:

1

1

2

2

3

julia> end_node = round.(Int, data[:,2])

5-element Array{Int64,1}:

2

3

3

4

4

julia> link_length = data[:,3]

5-element Array{Float64,1}:

2.0

4.5

6.0

3.0

5.0

After some computation, suppose we obtained the following result:

value1 = [1.4; 3.1; 5.3; 2.7]

value2 = [4.3; 7.0; 3.6; 6.2]

which we want to save in the following format:

| node | first value | second value |

| 1 | 1.4 | 4.3 |

| 2 | 3.1 | 7.0 |

| 3 | 5.3 | 3.6 |

| 4 | 2.7 | 6.2 |

We can simply write the values on a file in the format we want:

resultfile = open("result.csv", "w")

println(resultfile, "node, first value, second value")

for i in 1:length(value1)

println(resultfile, "$i, $(value1[i]), $(value2[i])")

end

close(resultfile)

The result file looks like:

code/chap3/result.csv

node, first value, second value

1, 1.4, 4.3

2, 3.1, 7.0

3, 5.3, 3.6

4, 2.7, 6.2

3.10 Plotting

For plotting, I recommend the PyPlot package for most people. The PyPlot package calls the famous Python plotting module called matplotlib.pyplot. The power of PyPlot comes in at the cost of installing Python, which happens automatically. Read this Wiki page10 for an introduction. You can use PyPlot via the Plots package.

At this moment, I find the PyPlot package provides more powerful plotting tools that are suitable for generating plots for academic research papers—yes, Python has been around for a while. It just comes at the cost of installing additional software packages.

3.10.1 The PyPlot Package

To use PyPlot, we need a distribution of Python and matplotlib. Julia can automatically install a distribution for you, which will be private to Julia and will not be accessible outside of Julia. If you want to use your existing Python, please consult with the documentation of PyCall11.

The following process will automatically install Python and matplotlib.

- Open a new terminal window and run Julia. Initialize the

PYTHONenvironment variable:julia> ENV["PYTHON"] = "" ""

- Install PyPlot:

julia> using Pkg julia> Pkg.add("PyPlot")

An example is given below:

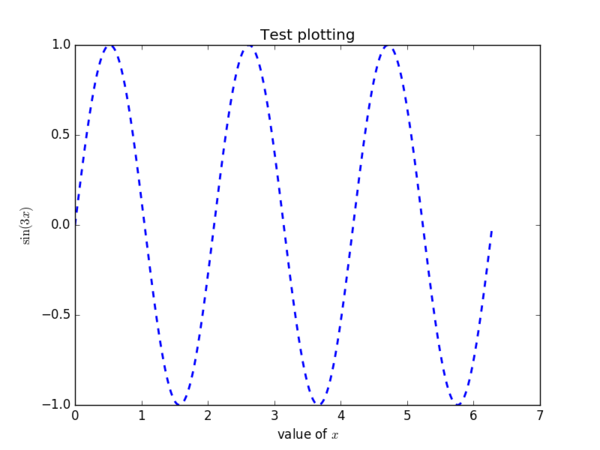

code/chap3/plot1.jl

using PyPlot

# Preparing a figure object

fig = figure()

# Data

x = range(0, stop=2*pi, length=1000)

y = sin.(3*x)

# Plotting with linewidth and linestyle specified

plot(x, y, color="blue", linewidth=2.0, linestyle="--")

# Labeling the axes

xlabel(L"value of $x$")

ylabel(L"\sin(3x)")

# Title

title("Test plotting")

# Save the figure as PNG and PDF

savefig("plot1.png")

savefig("plot1.pdf")

# Close the figure object

close(fig)

The result is:

When you use PyPlot for the first time, it will take some time to precompile. In the above code, L in front of "value of $x$" means that the string inside the quotation marks will be LATEX strings. When you have dollar signs ($) inside L"...", it is a combination of text and math symbols; when you don’t, like as in L"\sin(3x)", the entire text consists of math symbols only.

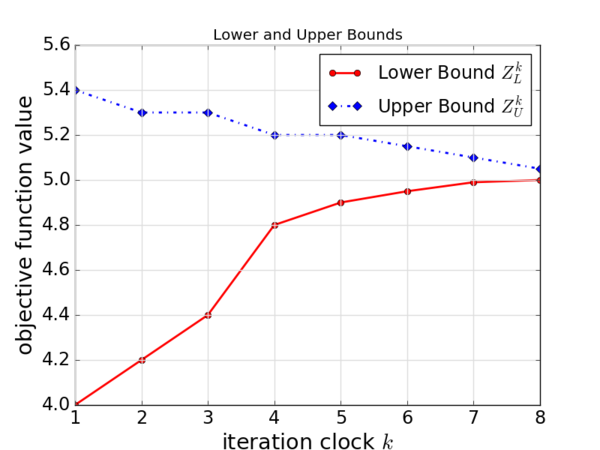

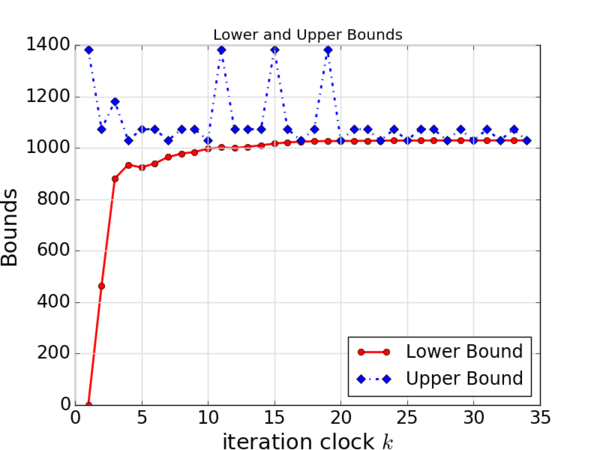

For another example, suppose that you obtained lower bounds and upper bounds from iterations of an algorithm. Then you want to put these two data in a single plot.

code/chap3/plot2.jl

using PyPlot

# Data

lower_bound = [4.0, 4.2, 4.4, 4.8, 4.9, 4.95, 4.99, 5.00]

upper_bound = [5.4, 5.3, 5.3, 5.2, 5.2, 5.15, 5.10, 5.05]

iter = 1:8

# Creating a new figure object

fig = figure()

# Plotting two datasets

plot(iter, lower_bound, color="red", linewidth=2.0, linestyle="-",

marker="o", label=L"Lower Bound $Z^k_L$")

plot(iter, upper_bound, color="blue", linewidth=2.0, linestyle="-.",

marker="D", label=L"Upper Bound $Z^k_U$")

# Labeling axes

xlabel(L"iteration clock $k$", fontsize="xx-large")

ylabel("objective function value", fontsize="xx-large")

# Putting the legend and determining the location

legend(loc="upper right", fontsize="x-large")

# Add grid lines

grid(color="#DDDDDD", linestyle="-", linewidth=1.0)

tick_params(axis="both", which="major", labelsize="x-large")

# Title

title("Lower and Upper Bounds")

# Save the figure as PNG and PDF

savefig("plot2.png")

savefig("plot2.pdf")

# Closing the figure object

close(fig)

The result is:.

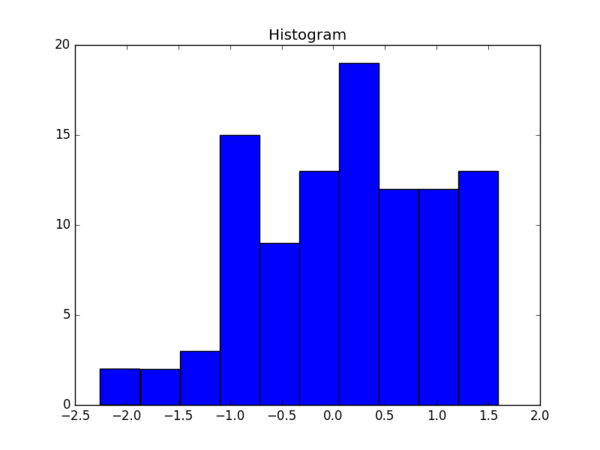

One can also create a histogram easily.

code/chap3/plot3.jl

using PyPlot

# Data

data = randn(100) # Some Random Data

nbins = 10 # Number of bins

# Creating a new figure object

fig = figure()

# Histogram

plt[:hist](data, nbins)

# Title

title("Histogram")

# Save the figure as PNG and PDF

savefig("plot3.png")

savefig("plot3.pdf")

# Closing the figure object

close(fig)

The result is:

Many examples and sample codes for using PyPlot are provided in the following link:

Since PyPlot calls functions from matplotlib, the following documents are also helpful.

3.10.2 Avoiding Type-3 Fonts in PyPlot

Some journal submission systems don’t like PDF files saved by matplotlib. It is usually because the system cannot handle some newer font styles, Type-3 fonts. There are two ways:

- Method 1. Using options of

matplotlib: We can specifically tell matplotlib to avoid Type-3 fonts as explained in this link.15 Create a file calledmatplotlibrc(without any extension in the filename) and place it in the same directory as the Julia script. Put the following commands in thematplotlibrcfile:ps.useafm : True pdf.use14corefonts : True text.usetex : True

- Method 2. Using the

pgfpackage of LATEX: Instead of using the commandsavefig("myplot.pdf"), one can usesavefig("myplot.pgf"), which will save the figure as a set of LATEX commands that uses thepgfpackage. Then use the following command to include the figure in the main LATEX document:\begin{figure} \centering \resizebox{0.7\textwidth}{!}{\input{myplot.pgf}} \caption{Figure caption goes here..} \label{fig:myplot} \end{figure}

Don’t forget to include

\usepackage{pgf}in the preamble of your main LATEX document.

Chapter 4 Selected Topics in Numerical Methods

Although this book does not aim to cover details of numerical methods and algorithms, this chapter will go over very basics of selected topics. Namely, curve fitting, numerical differentiation, and numerical integration are briefly explained. While students and researchers in operations research may not directly use these methods in their own problem solving, the concepts behind these fundamental topics are often useful to understand more complicated and advanced methods. It is also helpful to recognize how related computer software for numerical computations would be designed and what the limitations are.

4.1 Curve Fitting

From data collection or experiment results, we may obtain discrete data sets such as

where \( x_i \) is an input value and \( y_i \) is an output value for each \( i=1,...,n \). For example, \( x_i \) could be the price of a popular book in time period \( i \), and \( y_i \) is the corresponding sales volume in time period \( i \). Instead of having this discrete data set, we often want to represent the relationship between \( x \) and \( y \) as an analytical expression, such as a linear function:

or an exponential function

In general, a mathematical function with a vector of parameters \( \vect{\beta} \)

that makes sense in the context of \( x \), \( y \), and the relationship. Curve fitting aims to find the values of parameters used in the function form \( f(\cdot; \vect{\beta}) \) so that the obtained analytical functional form is the closest to the original discrete data set.

Related to curve fitting, interpolation finds the values of parameters so that the analytical function passes through the discrete data points. This approach assumes that the discrete data set is accurate and exact. When we use polynomial functions for interpolation—called ‘polynomial interpolation’—available methods are Lagrange’s Method, Newton’s Method, and Neville’s Method.

Instead of using only one polynomial function for the entire data set, we can use a piecewise polynomial function, a function whose segments are separate polynomial functions. The most popular choice is to use a piecewise cubic function, or cubic spline, and the method is called ‘cubic spline interpolation’. For interpolation methods, most books with ‘numerical methods’ or ‘numerical analysis’ in the title are helpful; see Kiusalaas (2013)1 for example.

In curve fitting, the objective is to determine \( \vect{\beta} \) in \( f(\cdot; \vect{\beta}) \) to match the values of \( f(x_i; \vect{\beta}) \) to \( y_i \) for all \( i=1,...,n \) as much as we can. The definition of the best match or best fit depends on one’s definition. The most popular definition is based on the least-squares. That is, we aim to minimize

by optimally choosing \( \vect{\beta} \). This is a nonlinear optimization problem in general. When we want a linear function for \( f(x) \)—called linear regression—the problem becomes a quadratic optimization problem, which can be solved relatively easily.

Finding an optimal \( \vect{\beta} \) is related to solving a system of equations:

where \( m \) is the number of parameters. In case of linear regression, the problem is to solve a system of linear equations.

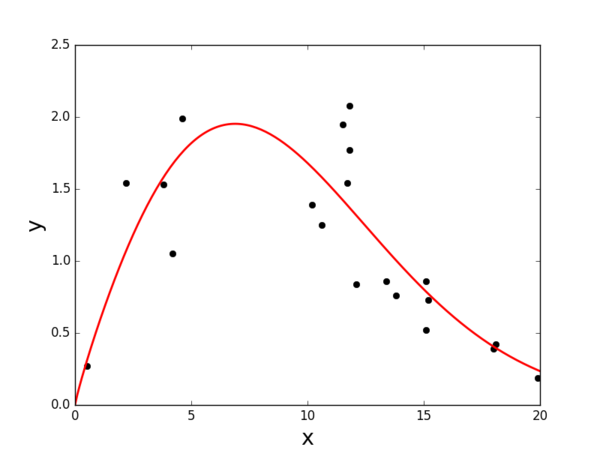

For general nonlinear least-squares fit, the Levenberg-Marquardt algorithm is popular. See Nocedal and Wright (2006)2 for details. In Julia, the LsqFit package3 from the JuliaOpt group implements the Levenberg-Marquardt algorithm. First add the package:

julia> using Pkg

julia> Pkg.add("LsqFit")

Suppose we have the following data set:

xdata = [ 15.2; 19.9; 2.2; 11.8; 12.1; 18.1; 11.8; 13.4; 11.5; 0.5;

18.0; 10.2; 10.6; 13.8; 4.6; 3.8; 15.1; 15.1; 11.7; 4.2 ]

ydata = [ 0.73; 0.19; 1.54; 2.08; 0.84; 0.42; 1.77; 0.86; 1.95; 0.27;

0.39; 1.39; 1.25; 0.76; 1.99; 1.53; 0.86; 0.52; 1.54; 1.05 ]

We would like to use the following function form:

To determine \( \vect{\beta} \), we prepare a curve fitting model as a function that returns a vector:

function model(xdata, beta)

values = similar(xdata)

for i in 1:length(values)

values[i] = beta[1] * ((xdata[i]/beta[2])^(beta[3]-1)) *

(exp( - (xdata[i]/beta[2])^beta[3] ))

end

return values

end

This can be equivalently written as:

model(x,beta) = beta[1] * ((x/beta[2]).^(beta[3]-1)) .*

(exp.( - (x/beta[2]).^beta[3] ))

where .^ and .* represent element-wise operations.

With some initial guess on \( \vect{\beta} \) as [3.0, 8.0, 3.0], we do

using LsqFit

fit = curve_fit(model, xdata, ydata, [3.0, 8.0, 3.0])

The obtained parameter values are accessed by:

julia> beta = fit.param

3-element Array{Float64,1}:

4.459414325729536

10.254403821607001

1.8911376587646551

and the error estimates for fitting parameters are accessed by:

julia> margin_error(fit)

3-element Array{Float64,1}:

1.240597479103558

1.410107749179365

0.4015751605563139

The result of curve fitting is presented in Figure 4.1. The complete code is provided:

code/chap4/curve_fit.jl

using LsqFit # for curve fitting

using PyPlot # for drawing plots

# preparing data for fitting

xdata = [ 15.2; 19.9; 2.2; 11.8; 12.1; 18.1; 11.8; 13.4; 11.5; 0.5;

18.0; 10.2; 10.6; 13.8; 4.6; 3.8; 15.1; 15.1; 11.7; 4.2 ]

ydata = [ 0.73; 0.19; 1.54; 2.08; 0.84; 0.42; 1.77; 0.86; 1.95; 0.27;

0.39; 1.39; 1.25; 0.76; 1.99; 1.53; 0.86; 0.52; 1.54; 1.05 ]

# defining a model

model(x,beta) = beta[1] * ((x/beta[2]).^(beta[3]-1)) .*

(exp.( - (x/beta[2]).^beta[3] ))

# run the curve fitting algorithm

fit = curve_fit(model, xdata, ydata, [3.0, 8.0, 3.0])

# results of the fitting

beta_fit = fit.param

errors = margin_error(fit)

# preparing the fitting evaluation

xfit = 0:0.1:20

yfit = model(xfit, fit.param)

# Creating a new figure object

fig = figure()

# Plotting two datasets

plot(xdata, ydata, color="black", linewidth=2.0, marker="o", linestyle="None")

plot(xfit, yfit, color="red", linewidth=2.0)

# Labeling axes

xlabel("x", fontsize="xx-large")

ylabel("y", fontsize="xx-large")

# Save the figure as PNG and PDF

savefig("fit_plot.png")

savefig("fit_plot.pdf")

# Closing the figure object

close(fig)

4.2 Numerical Differentiation

Given a function \( f(x) \), we often need to compute its derivative without actually differentiating the function. That is, without the analytical form expressions of \( f'(x) \) or \( f''(x) \), we need to compute them numerically. This process of numerical differentiation is usually done by finite difference approximations.

The idea is simple. The definition of the first-order derivative is

from which we obtain a finite difference approximation:

for sufficiently small \( h>0 \). This approximation is called the forward finite difference approximation. The backward approximation is

and the central approximation is

Suppose we have discrete points \( x_1, x_2, ..., x_n \). For mid-points from \( x_2 \) to \( x_{n-1} \), we can use any finite difference approximation and typically prefer the central approximation. At the boundary points \( x_1 \) and \( x_n \), the central approximation is unavailable; hence we need to use the forward approximation for \( x_1 \) and the backward approximation for \( x_n \).

To be more precise, let us consider the following Taylor series expansions:

From (4.1), we obtain the forward approximation:

From (4.2), we obtain the backward approximation:

By subtracting (4.2) from (4.1), we obtain the central approximation

Note that the central approximation has a higher-order truncation error \( \Oc(h^2) \) than the forward and backward approximations, which means typically smaller errors for sufficiently small \( h \).

The second-order derivative is similarly approximated. By adding (4.1) and (4.2), we obtain

which leads to the central finite difference approximation of the second-order derivative:

We can similarly derive the forward and backward approximations of the second-order derivative. We can also approximate the third- and fourth-order derivatives. See Kiusalaas (2013)4 for details.

In Julia, the Calculus package is available for numerical differentiation. First install and import the package:

using Pkg

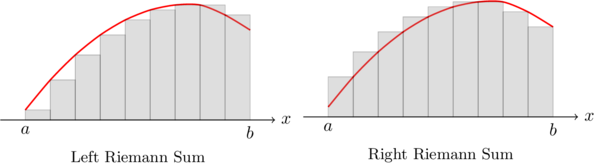

Pkg.add("Calculus")